Here at Bandai Namco Studios, we’ve published three CC0 image assets produced by TrueHDRI, our technical research initiative aimed at faithfully reproducing the lighting environments of real spaces in CG.

We interviewed three members of the team that developed TrueHDRI about how the initiative came to be, some behind-the-scenes stories, and their hopes for the future.

This interview is an enjoyable read even for those who are not familiar with HDRI, so don’t be shy!

Developer Profile

●Masato Kanno (Art Director)

Masato Kanno joined Namco (now Bandai Namco Studios) in 1995 and has worked mainly on background art and mecha designs for the Ace Combat series. He served as Art Director for Ace Combat 7. Recently, he built a lookdev environment using TrueHDRI and shared it within the company.

●Masayuki Suzuki (Engineer)

After overseeing character modeling, animation, background art, lighting, and shaders, Masayuki Suzuki now works as a TA (technical artist) on graphics-related research and provides support across multiple projects.

●Shohei Yamaguchi (Technical Director)

Shohei Yamaguchi joined the Japan Post Production Association as a CG Technical Director in 2012 and worked on commercials and films. He was in charge of color management, live-action shoots, technical support for compositing, and tool development. In 2019, he became interested in real-time graphics and joined Bandai Namco Studios, where he now works as a TA on lighting, related technical support, and research.

TrueHDRI: Incorporating Real-life Lighting into CG Spaces

― Great work on publishing the TrueHDRI image assets! I would like to talk in depth about the journey from the research to its publication. Let’s start with the basics: what kind of technology is TrueHDRI?

Kanno: To put it simply, TrueHDRI is a technology that allows us to incorporate real-life lighting into CG spaces.

Real-world light ranges from the extremely bright sun to the lights in our office, and even to fireflies; there’s a huge variation in brightness between different sources.

We call that range of brightness “dynamic range”, and it changes depending on whether it’s midday, evening, nighttime, or indoors. TrueHDRI captures that full dynamic range and brings it into CG spaces.

Development Teams Using HDRI Needed to Know the “Correct” Lighting

―How did you incorporate lighting into your work before developing TrueHDRI?

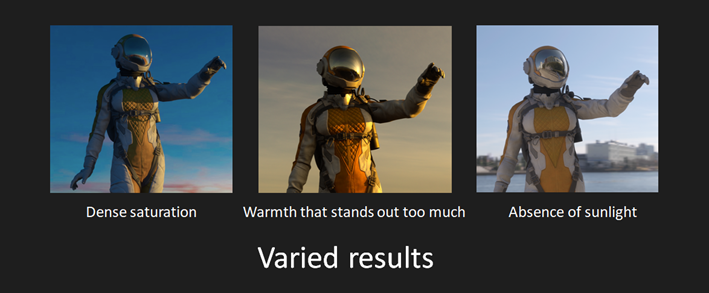

Kanno: We used a technology called Image Based Lighting (IBL), which takes a photo captured with a camera and uses it on the computer as a light source to illuminate a CG space. This technique has existed for a while, but our results varied depending on who was working on it. For example, the sunlight might be missing, or the lighting might be too blue.

When I was working on ACE COMBAT 7, the intensity of the lights during the day, at night, and in the hangar (where the fighter jets are parked) varied from person to person.

Sometimes the lit-up parts of the fighter jets looked too bright for daytime, or something placed indoors shone too brightly. We were constantly searching for a standard we could apply across the board when it came to lighting.

Suzuki: On the project I worked on in 2018, the creators were always lamenting that they did not know what the “correct” lighting should look like. Many of us were modeling in a development environment without clear standards.

As a result, things looked different once they were in the actual game. Many of the developers asked for a development environment that would show them what things would actually look like.

Before TrueHDRI, we downloaded HDRIs and adjusted them by hand to suit our needs, until we eventually decided to shoot our own so we could be sure of their accuracy.

― I see, that’s how the TrueHDRI technology research started.

Kanno: I mentioned dynamic range earlier, but the brightness of a light can be expressed in numbers.

The brightest source is the sun, at 1.6B cd/㎡ (candelas per square meter). If you try to take a photo of it, it’s far too bright and washes everything out.

Yamaguchi: From 0 cd/㎡, which is pitch black, to the sun at 1.6B cd/㎡. TrueHDRI started with the concept of incorporating this full dynamic range directly into CG.
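Photographers and lighting artists usually express a brightness range like this in stops, where each stop is a doubling of luminance, so the number of stops between two levels is the base-2 logarithm of their ratio. The sketch below only illustrates that arithmetic. The function name and the 0.001 cd/㎡ dark floor are hypothetical choices (a true 0 cd/㎡ has no finite ratio); only the 1.6B cd/㎡ figure for the sun comes from the interview.

```python
import math


def stops_between(bright_cd_m2: float, dark_cd_m2: float) -> float:
    """Number of photographic stops (doublings of luminance) between two luminances in cd/m^2."""
    if bright_cd_m2 <= 0 or dark_cd_m2 <= 0:
        # Pitch black (0 cd/m^2) would give an infinite ratio, so a positive floor is required.
        raise ValueError("luminances must be positive")
    return math.log2(bright_cd_m2 / dark_cd_m2)


SUN_CD_M2 = 1.6e9        # luminance of the sun quoted in the interview
DARK_FLOOR_CD_M2 = 1e-3  # hypothetical near-black floor, chosen only for illustration

print(f"{stops_between(SUN_CD_M2, DARK_FLOOR_CD_M2):.1f} stops")  # 40.5 stops
print(f"{stops_between(10_000, 1):.1f} stops")                    # 13.3 stops
```

With that assumed floor, the sun sits about 40 stops above it, while a ratio of 10,000 times, like the one the team mentions between its brightest and darkest scenes, works out to roughly 13.3 stops.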

Research that started with reassessing shooting equipment, along with a handmade neutral density filter!

Kanno: We tried out a 360-degree camera that had just been released at the time and found that, for all the convenience of capturing the entire surroundings in one go, it came with some issues that needed to be solved.

It didn’t give us the resolution we expected, and it couldn’t capture bright objects like the sun. It was brand-new equipment, but we realized that it did not fit the direction we wanted to take our research.

So we decided to reassess our methods for capturing accurate HDRI, including the equipment, and began our research in earnest in the autumn of 2018.

Yamaguchi: It all started with the camera. Reliability is key for TrueHDRI.

We hear the term “4K” thrown around a lot these days, but TrueHDRI images are actually 16K pixels wide and 8K pixels tall! We selected a camera that can capture at such a high resolution.
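To get a feel for what 16K buys, it helps to convert resolution into angular sampling. In a 2:1 equirectangular panorama the width covers 360° and the height covers 180°, so both axes have the same density in pixels per degree (the vertical density holds at every elevation, while rows near the poles are stretched horizontally). This sketch assumes “16K” and “4K” mean widths of 16,384 and 4,096 pixels and takes the sun’s apparent diameter as about 0.53°; neither the helper names nor these exact widths come from the interview.

```python
SUN_ANGULAR_DIAMETER_DEG = 0.53  # approximate apparent diameter of the sun


def pixels_per_degree(width_px: int) -> float:
    """Sampling density of a 2:1 equirectangular panorama (360 degrees across the width)."""
    return width_px / 360.0


def sun_disk_height_px(width_px: int) -> float:
    """Vertical extent of the sun's disk in pixels; in this projection it does not depend on elevation."""
    return pixels_per_degree(width_px) * SUN_ANGULAR_DIAMETER_DEG


# Assumed widths: "4K" = 4096 px and "16K" = 16384 px (hypothetical exact values).
for label, width in [("4K", 4096), ("16K", 16384)]:
    print(f"{label}: {pixels_per_degree(width):.1f} px/deg, "
          f"sun about {sun_disk_height_px(width):.1f} px tall")
```

Under these assumptions the sun’s disk is roughly 24 pixels tall at 16K versus about 6 pixels at 4K.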

The equipment needed to fulfill two requirements: a dynamic range of 0 to 1.6B cd/㎡, and a resolution of 16K.

Kanno: Next was the neutral density filter, or ND filter, an accessory that reduces the amount of light reaching the camera’s sensor and results in a darker capture.
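ND filters are usually rated by a filter factor N: the filter passes 1/N of the light, which is a reduction of log2(N) stops and an optical density of log10(N). When the camera is used to measure light, a linear pixel value recorded through the filter has to be multiplied back up by N. The interview doesn’t say which filter strength the team used, so the ND1000 example below is a hypothetical choice, as are the function names.

```python
import math


def nd_stops(filter_factor: float) -> float:
    """Exposure reduction in stops for an ND filter that passes 1/filter_factor of the light."""
    return math.log2(filter_factor)


def nd_optical_density(filter_factor: float) -> float:
    """Optical density: the base-10 logarithm of the filter factor."""
    return math.log10(filter_factor)


def undo_nd(linear_value: float, filter_factor: float) -> float:
    """Scale a linear pixel value captured through the filter back to its unfiltered level."""
    return linear_value * filter_factor


# Hypothetical example: an ND1000 filter (filter factor 1000).
print(f"{nd_stops(1000):.2f} stops, OD {nd_optical_density(1000):.1f}")  # 9.97 stops, OD 3.0
print(undo_nd(0.25, 1000))  # 250.0
```

The rescaling in `undo_nd` is only valid for linear data; gamma-encoded values such as those in a JPEG would need to be linearized first.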

Fisheye and wide-angle lenses, which we often use to shoot HDRI assets, usually take the filter at the rear, between the lens and the sensor. Attaching and removing it became quite a hassle when we were shooting frequently in multiple places.

So I made a frame that could be easily attached and detached.

Yamaguchi: The filter itself was purchased, but Kanno made the frame to attach it to the camera like a pro.

Kanno: I made the most out of cardstock and spike tape (adhesive tape used on set). (laughs)

Mifune Bridge, Eitai Bridge, and BNS Café: Three Distinctive Materials from Three Locations

*BNS is the abbreviation for Bandai Namco Studios

― I see that your methods took shape through a process of trial and error.

Kanno: By around January 2019, I felt that this method would give us the most satisfactory results. The Eitai Bridge assets available in the TrueHDRI library on our website were captured then.

― Did you scout out several locations before that?

Kanno: Yes, both in Japan and abroad. From the night view of Shinjuku to yards surrounded by trees and the shores of Okinawa, we shot in many different places.

I even shot in my father’s rice paddy in the middle of winter in my hometown of Yamagata. We were surrounded by snow, so it turned out to be great reference material for recreating the blue of the sky and the white light reflected from the ground in CG.

I also took every chance I got to shoot during downtime on business trips to the UK and the US for ACE COMBAT.

The equipment was quite bulky and bringing it around was challenging, but I was able to capture spaces and atmospheres not found in Japan.

We also stood out quite a lot when shooting in public, so security guards sometimes questioned us. One time, we were shooting on the beach, and some locals came up to ask if we were collecting seashells. (laughs)

Suzuki: When we compared the captures from all the different locations, we realized that Eitai Bridge actually encompassed a variety of materials.

Kanno: The sunny blue sky, of course, but also backlit buildings on one side and front-lit buildings on the other, and greenery when you look to the side. The Sumida River flows in from the left, and there are also reflections from the water.

The sidewalks along the riverbanks are tiled in familiar patterns, and I thought such ordinary, everyday spaces would become the standard when reproducing real-life scenes in CG.

Yamaguchi: In the end, we decided on Eitai Bridge for a sunny outdoors asset, Mifune Bridge for a nighttime city asset, and BNS Café (café space inside Bandai Namco Studios) for an indoors asset.

Suzuki: We were able to achieve a good balance, with Eitai Bridge as the brightest, Mifune Bridge as the darkest, and BNS Café in the middle.

It’s hard to tell just from looking at the photos, but the difference in brightness is about 10,000 times. It’s easier to see in Photoshop.

Suzuki: In normal use, cameras actually enhance color and contrast, so we had to capture in a way that removes that processing.

Yamaguchi: With most HDRIs, the brightness has already been adjusted by the time you open them in Photoshop.

A key feature of TrueHDRI is that the brightness of the real space is captured as is: dark scenes are dark and bright scenes are bright. We call this “correct exposure”.
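The interview doesn’t detail how the team assembles its captures, but a common way to recover scene brightness without the camera’s tone processing is to shoot a bracket of linear (RAW-like) exposures and merge them: each usable sample estimates relative radiance as pixel value divided by exposure time, and the estimates are averaged with a weight that ignores clipped and near-black samples. The sketch below shows that generic weighted-average merge for a single pixel; it is not TrueHDRI’s actual pipeline, the function names and weight thresholds are hypothetical, and turning the result into absolute cd/㎡ would additionally need a calibration factor (for example from a luminance meter reading).

```python
from typing import Sequence


def hat_weight(z: float, low: float = 0.02, high: float = 0.95) -> float:
    """Trust mid-tones most; ignore near-black (noisy) and near-clipped samples."""
    if z <= low or z >= high:
        return 0.0
    mid = (low + high) / 2.0
    return 1.0 - abs(z - mid) / (mid - low)


def merge_exposures(pixels: Sequence[float], exposure_times_s: Sequence[float]) -> float:
    """Relative radiance of one pixel from bracketed shots, assuming a linear camera response.

    pixels are linear values in [0, 1] with no tone curve applied. Each usable sample
    estimates radiance as value / exposure time; the estimates are weight-averaged.
    """
    num = 0.0
    den = 0.0
    for z, t in zip(pixels, exposure_times_s):
        w = hat_weight(z)
        num += w * (z / t)
        den += w
    if den == 0.0:
        raise ValueError("every sample was clipped or too dark; add more exposures")
    return num / den


# Simulated bracket of a point whose true relative radiance is 5.0 (synthetic data).
times = [1 / 1000, 1 / 100, 1 / 10, 1.0]
samples = [min(1.0, 5.0 * t) for t in times]  # the 1 s shot clips at 1.0
print(round(merge_exposures(samples, times), 3))  # 5.0
```

The clipped 1-second sample and the near-black 1/1000-second sample get zero weight, so only the two mid-tone exposures contribute to the estimate.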

How do you use a camera as a device to measure light? Deriving a formula for vignetting

―Were there any other challenges?

Yamaguchi: Didn’t Suzuki have a hard time making a process to obtain the correction value?

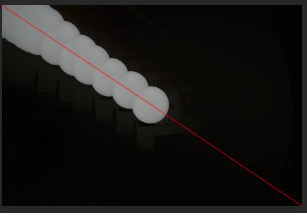

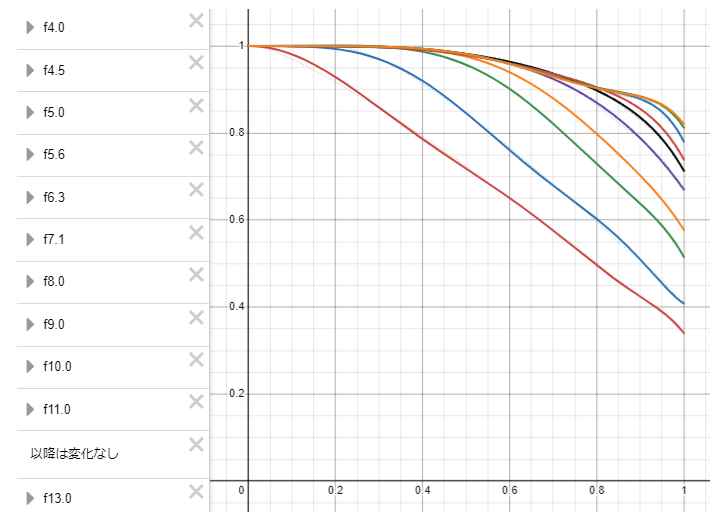

Suzuki: Yes… Vignetting in the camera lens makes the image darker the closer you get to the corners, so we needed to correct it.

I measured the values one by one to determine the extent of the vignetting and how to correct it.

Yamaguchi: Suzuki-san had been researching how to correct vignetting so that we could use the camera as a device to measure light.

Suzuki: We measured the vignetting by taking a series of photos of a light, moving it a constant amount each time toward the edge of the frame. The light gradually became darker, and I plotted that data.

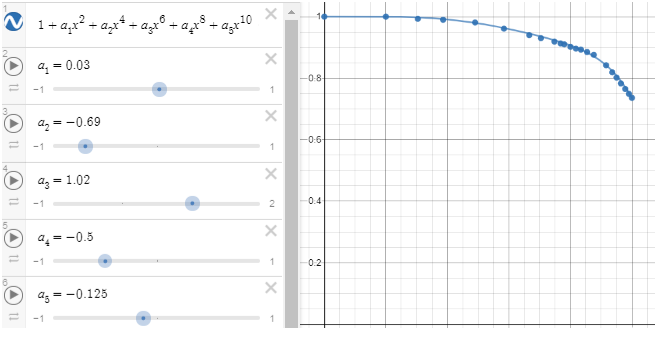

The left side (of the third image) is the formula to correct vignetting and the right side is the formula plotted. The dips in the data are areas darkened by vignetting, so the idea was to correct the vignetting by turning the plotted curve into a straight line.
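The team’s own correction formula appears only in the image, so this sketch uses a different, commonly used model as a stand-in: the relative brightness V(r) at normalized distance r from the image center is fitted as V(r) = 1 + a·r² + b·r⁴ by least squares, and each pixel is then multiplied by 1 / V(r), which flattens the measured falloff into a straight line at 1.0. The function names and the synthetic sample data are hypothetical; real inputs would be the brightness readings from the moving-light captures.

```python
from typing import Sequence, Tuple


def fit_vignetting(radii: Sequence[float], brightness: Sequence[float]) -> Tuple[float, float]:
    """Least-squares fit of V(r) = 1 + a*r^2 + b*r^4.

    radii: normalized distance from the image center (0 = center, 1 = edge of the frame).
    brightness: measured value at each radius divided by the value at the center.
    """
    s22 = s24 = s44 = t2 = t4 = 0.0
    for r, v in zip(radii, brightness):
        x2, x4, y = r * r, r ** 4, v - 1.0
        s22 += x2 * x2
        s24 += x2 * x4
        s44 += x4 * x4
        t2 += x2 * y
        t4 += x4 * y
    # Solve the 2x2 normal equations [s22 s24; s24 s44] [a; b] = [t2; t4] by Cramer's rule.
    det = s22 * s44 - s24 * s24
    if det == 0.0:
        raise ValueError("need samples at two or more distinct non-zero radii")
    a = (t2 * s44 - s24 * t4) / det
    b = (s22 * t4 - s24 * t2) / det
    return a, b


def correction_gain(r: float, a: float, b: float) -> float:
    """Factor that undoes the fitted falloff for a pixel at normalized radius r."""
    return 1.0 / (1.0 + a * r * r + b * r ** 4)


# Synthetic, noise-free samples generated from a = -0.3, b = 0.05 (illustration only).
radii = [i / 10 for i in range(11)]
measured = [1.0 - 0.3 * r * r + 0.05 * r ** 4 for r in radii]
a, b = fit_vignetting(radii, measured)
corrected = [v * correction_gain(r, a, b) for r, v in zip(radii, measured)]
print(f"a={a:.3f}, b={b:.3f}")           # a=-0.300, b=0.050
print([round(c, 6) for c in corrected])  # every sample back at 1.0
```

An even polynomial in r is a convenient choice because vignetting in a rotationally symmetric lens depends only on distance from the optical center; real measurements with noise would give a best fit rather than an exact recovery.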

Kanno: We actually struggled a lot with moving the light source the same distance for every step.

We used a fisheye lens, so it was impossible to cover the image with a flat white sheet of paper.

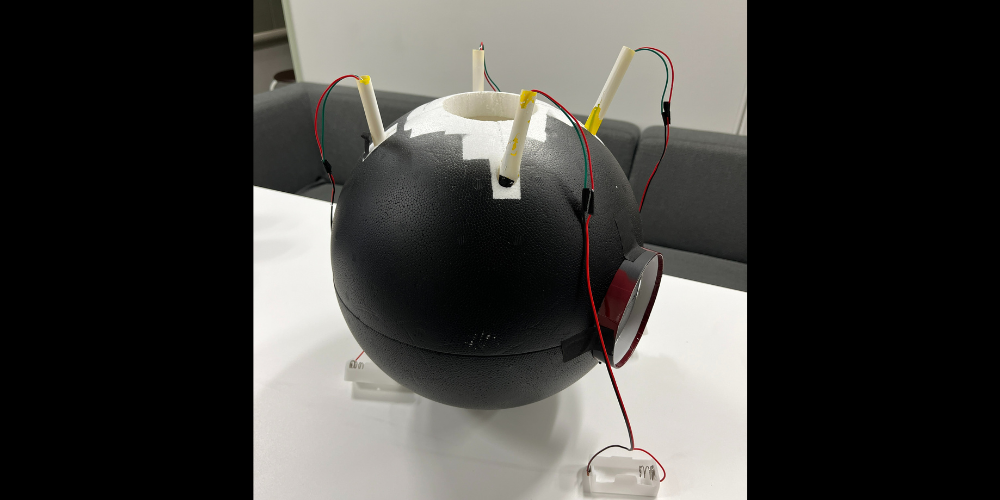

I got creative and made a sphere (an integrating sphere) out of Styrofoam, put the camera inside it, and took pictures from inside the sphere. I installed small light bulbs evenly spaced inside the sphere so the light would scatter uniformly and keep the brightness even, with no shadows.
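An evenly lit enclosure like this is what makes flat-field correction possible: if every direction the lens sees has the same brightness, any variation in the captured image comes from the lens and sensor, so the image itself serves as a per-pixel vignetting map. A minimal sketch of that idea, assuming linear pixel values stored as nested lists, several flat frames averaged to reduce noise, and the center pixel used as the reference level; the helper names are hypothetical, and this is not presented as the team’s actual procedure.

```python
from typing import List

Image = List[List[float]]  # rows of linear pixel values


def average_frames(frames: List[Image]) -> Image:
    """Average several flat-field captures pixel by pixel to reduce noise."""
    n = len(frames)
    height, width = len(frames[0]), len(frames[0][0])
    return [[sum(f[y][x] for f in frames) / n for x in range(width)] for y in range(height)]


def flat_field_gain(flat: Image) -> Image:
    """Per-pixel gain that brings every pixel of the flat capture up to the center level."""
    ref = flat[len(flat) // 2][len(flat[0]) // 2]
    return [[ref / v for v in row] for row in flat]


def apply_gain(image: Image, gain: Image) -> Image:
    """Multiply a photo by the gain map, pixel by pixel."""
    return [[p * g for p, g in zip(prow, grow)] for prow, grow in zip(image, gain)]


# Tiny synthetic 3x3 example: corners lose about 40% and edges about 20% to vignetting.
flat_a = [[0.58, 0.79, 0.60], [0.81, 1.00, 0.80], [0.62, 0.80, 0.60]]
flat_b = [[0.62, 0.81, 0.60], [0.79, 1.00, 0.80], [0.58, 0.80, 0.60]]
gain = flat_field_gain(average_frames([flat_a, flat_b]))

# A photo of a uniformly lit wall, darkened by the same vignetting.
photo = [[0.3, 0.4, 0.3], [0.4, 0.5, 0.4], [0.3, 0.4, 0.3]]
corrected = apply_gain(photo, gain)
print([[round(p, 6) for p in row] for row in corrected])
```

After correction every pixel of the uniform wall reads the same as the center, which is the flat result the sphere is meant to make measurable.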

―Even the integrating sphere was hand-made!?

Kanno: It was no easy feat. If I missed a spot, I had to repaint the sphere in white all over again.

And when I thought I was getting nowhere with the sphere, Suzuki suggested putting the camera inside the round cover of a ceiling light. That was my eureka moment. It was a continuous process of trial and error. (laughs)

Taking up the challenge to create a credible lookdev environment using a game engine

―I understand now that TrueHDRI assets were created through a repeated process of taking photos under different environments and measuring their values.

Kanno: Since 2019, we have been working on a lookdev environment that uses TrueHDRI, to show people how good the technology is. Lookdev (look development) is the process of defining how the materials of a CG model look.

Yamaguchi: The general idea is to use the lookdev environment to check whether an object’s materials look correct.

For example, let’s place objects with different materials in the three locations from the released assets. We switch between the environments to see whether metallic objects consistently look metallic, plastic objects consistently look plastic, and light from a light bulb is consistently bright. This lets you configure an object’s materials so they look right in any environment.

Kanno: We believed that if we created a TrueHDRI-enabled lookdev environment that runs on a game engine, it would help us gain credibility with other teams.

Suzuki: Our internal development teams started seriously using our lookdev environment in April of that year.

Bringing Bandai Namco Studios’ research and technology to the world! What led to the decision to release the TrueHDRI assets?

―What led to the CC0 release of TrueHDRI, an in-house-developed technology?

Kanno: This technology started as a BNS internal research initiative, but we shared it with development teams beyond the Ace Combat team, and it began to spread from there.

We were encouraged to make it public, so we showed it to other companies and let their developers use the environment during joint research.

As we continued these efforts, we realized that rather than relying on word of mouth, we could simply release the assets to the whole world to help advance CG. That’s why we put them out there, hoping our efforts will pay off.

Suzuki: I had a hard time with the many HDRIs out in the wild and saw so many teams go through the same struggles I did. I hope the situation improves with the release of these assets.

Yamaguchi: It would make us happy if everyone used our assets for their creations.

The pitch is that your creations will be more consistent if you use TrueHDRI. So we hope everyone will use TrueHDRI and get on board, and that it will eventually become the standard.

It’s like recruiting people for a party.

What color is the sky for you? The real value of TrueHDRI is in team communication

―Thank you for sharing stories from the beginning of development up to the assets’ release! For the last part of the interview, is there anything else you want to share about the ever-evolving TrueHDRI technology?

Yamaguchi: We plan to add more variations of the image assets moving forward.

Many people still struggle with TrueHDRI, so we hope to publish documentation and a sample scene.

TrueHDRI can also be used as educational material for learning about correct lighting.

We hope everyone will measure the values at various points in these assets to deepen their understanding of lighting in real spaces.

Suzuki: TrueHDRI is a technology that can make development more efficient.

Even within BNS, there’s a stark contrast in the time spent working on textures between teams that use lookdev and teams that don’t.

We hope everyone will use it so they can see that for themselves.

Kanno: We actually use TrueHDRI to develop our games as well. I believe this technology is what made our efforts to build an environment for creating realistic visuals possible.

You should be able to see the results of our efforts soon, but the real value lies in the communication created among our developers.

TrueHDRI can serve as a benchmark for what is real when it comes to things that vary with perception, such as the color of the sky or how bright a light should be.

By extension, this should lead to better developer performance, and we truly hope other teams will use it.

―Thank you for sharing such an incredible story. For our readers, detailed information on TrueHDRI is also available in the technology showcase section of the Bandai Namco Studios website, so be sure to check that out as well!